Ever since the development of Showscan in the early 1980s, Trumbull has harbored a dream of high-resolution, high-frame-rate production and distribution.

Back then, it was an idea that was a bit before its time, but today, with the advent of digital cinema and technologies like NVIDIA Quadro professional graphics processing units (GPUs), that vision is now becoming a reality.

“The power that’s available through GPU computing and the current generation processors is having a radical impact on overall production costs,” said Trumbull.

Trumbull envisions a production pipeline where directors can shoot in a greenscreen virtual-set environment and almost immediately review the shot with all of the VFX elements rendered in real time, in stereoscopic 3D running at 120 fps.

He has been pioneering this new film production paradigm – a concept he has loosely dubbed “Hyper Cinema” – and experimenting with a combination of virtual-set technologies and real-time, high-frame-rate stereoscopic display technologies.

Trumbull turned to long-time collaborator Paul Lacombe, CEO of UNREEL – an augmented reality and virtual set systems integrator and developer – to help realize the Hyper Cinema concept and design technologies for the new studio. UNREEL has deployed virtual sets at numerous broadcast facilities, ranging from top broadcast and cable networks like CBS, ESPN and CNBC to local TV broadcasters across the country. While the challenges of 4K, stereoscopic, 120 fps and photorealism are unique to the film industry, the concept is analogous. It all boils down to computational horsepower.

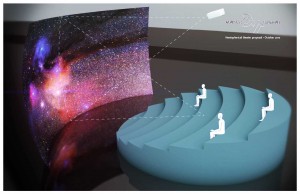

Trumbull has recently built a state-of-the-art production studio on his property in the Berkshire Hills of Massachusetts. The studio features an 80-foot greenscreen stage and an adjacent screening room equipped with a special hemispherical high-gain Torus film screen, where he can review high-resolution, 3D footage rendered out in real time or near real time. The screen is curved to reflect more light back to the audience and offers a much wider field of view than traditional screens, delivering a more immersive experience.

Trumbull’s facility combines an impressive array of cutting-edge technologies ranging from virtual-set, motion-tracking and motion-analysis systems to 3D stereolithographic printers for creating physical miniature models.

“As I swing back into directing and producing films, it’s a place to do a lot of frontier-pushing experiments, where we can shoot and then screen material immediately in this new format,” explained Trumbull. “It also allows us to experiment on stage and see the results immediately on the screen to evaluate if the camera angle is correct, the movement of the camera is right and the actors are framed properly.”

He stressed that “the mantra around here is that, if it’s not real time, then it’s got to be near real time, which means we see the live-action foreground and computer-generated background, so that we can make aesthetic and editorial judgments and proceed with production very rapidly. I really like working with real actors and actresses, real makeup and real wardrobe, but since you can superimpose anything in real time, my rule is: no real sets, no real locations, just real people. It allows me to shoot everything on one stage, in one place.”

Trumbull’s “Hyper Cinema” workflow raises some immense challenges. Keying and compositing live-action footage shot on a virtual set with a mixture of CG and miniature plates requires intense processing horsepower throughout the pipeline. But when you increase the frame rate to 120 fps and resolution to 4K, and then double the amount of data for stereoscopic 3D, the challenges are even bigger. UNREEL has been working closely with Trumbull to tackle this problem.
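To give a rough sense of scale, the sketch below estimates uncompressed data rates for an HD baseline and for the 4K, 120 fps stereoscopic target. The frame dimensions (4096 x 2160 for 4K), the 24 fps single-eye HD baseline and the 3 bytes per pixel (8-bit RGB) are illustrative assumptions, not figures supplied by Trumbull or UNREEL.

```python
def raw_rate_bytes_per_sec(width, height, fps, eyes=1, bytes_per_pixel=3):
    """Uncompressed video data rate in bytes per second.

    bytes_per_pixel=3 assumes 8-bit RGB; real camera raw formats differ.
    """
    return width * height * bytes_per_pixel * fps * eyes


# Assumed baseline: single-eye HD at 24 fps.
hd_mono = raw_rate_bytes_per_sec(1920, 1080, fps=24)

# Assumed target: 4K (4096 x 2160) at 120 fps, doubled for stereo.
hyper = raw_rate_bytes_per_sec(4096, 2160, fps=120, eyes=2)

print(f"HD 24 fps mono:    {hd_mono / 1e9:.2f} GB/s")
print(f"4K 120 fps stereo: {hyper / 1e9:.2f} GB/s")
print(f"Ratio:             {hyper / hd_mono:.1f}x")
```

Under these assumptions, the target format carries roughly 43 times the data of the HD baseline, before any keying or compositing is applied.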

“Shooting live action at 120 frames and getting the throughput to project at 120 frames is actually harder to do than real-time CG, because in CG, you can limit your polygon count and limit your texture maps to get the throughput that you want,” explained Lacombe. “I try to take the horsepower that NVIDIA can provide and use it to dramatically cut costs. The net impact is that every step in the production process is accelerated.”

With live-action footage, one of the most computationally intensive tasks is debayering raw image data from cameras like the RED EPIC, ARRI ALEXA or the Phantom 65. Color correction is also planned as a future addition to the pipeline.

Trumbull is preparing to direct a science-fiction film to demonstrate the full potential of high-frame-rate production and his “Hyper Cinema” concept – his first feature film since 1983, when he directed Brainstorm (which was originally supposed to be shot in the Showscan format as a proof of concept).

“It’s a new cinematic language, which calls for different kinds of camera angles and movements, different selection of lenses, different kinds of action and framing, and a different editorial pace, because the result of 120 frames in 3D on a very bright screen is like being inside a movie rather than looking at a movie,” said Trumbull. “It’s a very intense, participatory experience. What I’m trying to do is show the industry how we could substantially improve the movie-going experience for the public.”

While he’s not prepared to reveal the name of the new film yet, he admits that he has tailored his script to make the most of this new medium and workflow paradigm.

“When you want to introduce a movie process like I’m trying to do, I feel that it’s incumbent on me to direct it myself. I’m trying to explore this new cinematic language where the audience feels as though they’re actually there with the characters and part of the adventure,” he said.

In fact, according to Trumbull, one of the key challenges the film industry faces today is developing a compelling in-theater experience to reinvigorate slumping box-office numbers, which were at a 16-year low last year.

With younger audiences more likely to watch a film on their laptops than in a theater, Trumbull predicts that “we’re going to see a lot of turmoil in the exhibition business over the next few years as the industry transitions to a movie experience that’s so spectacular that you can’t get it on your laptop or smartphone, so that people come to a theater expecting something amazing, enveloping and powerful – all the things you can’t get on a small screen.”

Another key challenge is delivering that level of quality on a budget significantly smaller than those of the $200-million blockbusters that the major studios seem to bet on these days.

NVIDIA’s Quadro GPU technology has become a key enabler throughout the pipeline, impacting almost every stage of the production process.

“NVIDIA technology really comes into play as one of the big accelerators of this process,” said Lacombe. “We have NVIDIA Quadro cards in almost every computer on site, doing all kinds of graphics and parallel computing acceleration for high-throughput, high-bandwidth, 120 fps 3D material.”

That includes traditional VFX workstations running Autodesk Maya, 3ds Max and Rhino 3D, as well as a unique new adaptation of virtual set technology that encompasses camera tracking, keying and real-time rendering.

“We’ve got 16 motion analysis cameras up on the ceiling of the stage – the same type of cameras used on Avatar, or any other motion-capture system,” said Lacombe. “We use them to track our camera motion, so we can actually go into a virtual set with a hand-held camera and have an absolute lock between the foreground and background images with no slipping or sliding.”

“The rendering is all based on NVIDIA Quadro hardware,” explained Lacombe. “That can bring you to a certain level of real-time quality (1920 x 1080 HD), but now Doug wants to go to 4K and do 120 fps and do stereo, so he’s really pushing the limits. To do that, we’re capturing real-time metadata (timecode, camera tracking and the environment) and taking that into a ‘near time’ process where we can turn around results in a matter of minutes, so that Doug can say whether he’s going to buy a shot and move on, or re-shoot. He can quickly render out a shot at 4K stereo 120 fps using the GPU to accelerate the process.”
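As a back-of-the-envelope illustration of that “near time” budget, the sketch below computes how many frames per second a renderer would have to sustain to return a shot within a given turnaround. The 10-second shot length and 5-minute turnaround are hypothetical values chosen for illustration; only the 120 fps stereo target comes from Lacombe’s description.

```python
def required_render_rate(shot_seconds, fps, eyes, turnaround_seconds):
    """Frames per second the renderer must sustain to meet the turnaround."""
    total_frames = shot_seconds * fps * eyes
    return total_frames / turnaround_seconds


# Hypothetical shot: 10 seconds long, returned within 5 minutes.
shot_seconds = 10
turnaround_seconds = 5 * 60

rate = required_render_rate(shot_seconds, fps=120, eyes=2,
                            turnaround_seconds=turnaround_seconds)

print(f"Frames to render: {shot_seconds * 120 * 2}")
print(f"Required rate:    {rate:.1f} frames/s")
print(f"Time per frame:   {1000 / rate:.0f} ms")
```

With these hypothetical numbers, the renderer would need to sustain 8 frames per second, or about 125 ms per 4K frame, which is far more forgiving than the roughly 4 ms per frame that true real-time 120 fps stereo would demand.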

“The key now is to try to up-res the real-time output and to take it to the next level,” Lacombe added. “That’s where GPU acceleration using Maximus is going to play a key role.” NVIDIA Maximus technology enables a single, general-purpose workstation to not only render complex scenes immediately – thanks to the NVIDIA Quadro GPU – but also to harness the parallel-computing power of the integrated NVIDIA Tesla C2075 companion processor to perform multiple compute-intensive processes simultaneously.

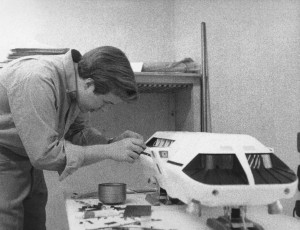

Trumbull explained that wherever possible, he prefers to shoot miniature models and composite them into a shot rather than relying on a purely CG environment. “For a synthetic environment, it’s much less expensive to build miniatures than do full photorealistic CGI,” he explained.

But Trumbull’s on-site model-making shop is a virtual one. Models are designed in modeling software like Autodesk 3ds Max, Maya or Rhino 3D and printed on 3D stereolithographic printers, which build a physical model from special polymers – a process that is also powered by an NVIDIA Quadro professional graphics card.

“We’re actually using NVIDIA products in the way we make the models, which is quite an interesting new way to go about it,” said Trumbull. “When we paint them and light them with real lights, they look like real things rather than synthetic computer graphics, so we can get rid of a lot of manual work in building miniatures.”

Trumbull explained that none of this would have been possible without a GPU-accelerated pipeline. In fact, he reported, “The first time I was even able to see 120 frame 3D was with a Quadro-powered server.”

Trumbull explained that he plans to prototype and debug his film in the pre-vis stage, borrowing a technique from the animation industry where films go through several major revisions based on storyboards and animatics, long before the actors are even signed, or the animators start to work.

“Being able to work at a higher resolution with more realism is what drives down overall production costs,” said Trumbull. “We’re going to rehearse live actors in virtual environments – not our principal cast, but good actors – just to debug the whole movie, so we can make whatever iterative changes need to be made long before we build our real virtual sets or even hire a real cast.”

But even if the sets are rough and the cast is a placeholder, during these rehearsals, Trumbull will be recording metadata of all sorts, including DMX data from the lighting systems and motion control camera moves. He explained that at this stage, “We need to be very frank with one another. The internal creative process is that no one should feel intimidated to say, ‘that’s a crummy line of dialog’ or ‘we should delete that sequence.’ That leads to a very high quality in the finished product. I’m trying to bring that same method or point-of-view to live-action rehearsals.”

With this unique “Hyper Cinema” approach, Trumbull said, “When we come back to actually shoot the main production, we hope to get 50 or 100 setups per day and shoot the whole movie in a couple of weeks.” He anticipates that the approach could cut production costs by as much as 75% compared with traditional workflows.

“We wouldn’t be anywhere near where we are now if it weren’t for the NVIDIA Quadro card,” said Trumbull. “It’s been a huge enabler for this whole philosophy, and fortunately for us, it just gets better and better. With these dramatic increases in performance, every stage of production is accelerated and the quality and cost of production continues to go down.”