SIGGRAPH 2016, a convention showcasing the latest in computer graphics and interactive techniques, will once again present its Technical Papers program. With the tagline “Render the Possibilities,” SIGGRAPH 2016 will be held at the Anaheim Convention Center, 24–28 July 2016.

SIGGRAPH’s annual Technical Papers program is meant to be a platform for disseminating new scholarly work in computer graphics and interactive techniques. As a preview of this year’s upcoming conference, the program trailer is available on the official ACM SIGGRAPH YouTube Channel.

Submissions to the Technical Papers program are received from around the world and feature never-before-seen scholarly work. SIGGRAPH 2016 accepted 119 juried technical papers (162 total; out of 467 submissions) for this year’s showcase, an acceptance rate of 25 percent. As per SIGGRAPH tradition, the papers were chosen by a qualified jury of experts from academia and industry.

“Among the trends we noticed from this year’s submissions was that research in core topics continues while the field itself broadens and matures. We saw nine papers on fabrication, and more synergy between topics such as machine learning, perception, and high-level interaction,” said SIGGRAPH 2016 Technical Papers program chair John Snyder.

Of the 119 juried papers, the percentage breakdown by topic area is as follows: 33% geometry/modeling; 19% image; 14% animation; 12% rendering; 10% physical simulation; 5% displays/virtual reality; 5% acquisition; and 2% interaction.

The SIGGRAPH 2016 Technical Papers program includes:

Shadow Theatre: Discovering Human Motion From a Sequence of Silhouettes

Jungdam Won and Jehee Lee, Seoul National University

Shadow theatre is a genre of performance art in which the actors are only visible as shadows projected on the screen. The goal of this study is to generate animated characters whose shadows match a sequence of target silhouettes.

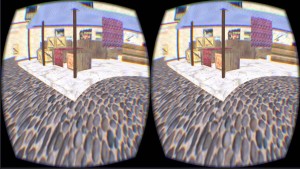

Mapping Virtual and Physical Reality

Qi Sun and Arie Kaufman, Stony Brook University; Li-Yi Wei, University of Hong Kong

This work maps a pair of given virtual and real worlds to support real walking in HMD-VR applications via novel 2D mapping and 3D rendering methods.

Flow-Guided Warping for Image-Based Shape Manipulation

Romain Vergne and Georges-Pierre Bonneau, Université Grenoble Alpes, Centre national de la recherche scientifique, INRIA; Pascal Barla, INRIA; and Roland W. Fleming, Justus-Liebig-Universität Gießen

This paper presents a method that manipulates perceived object shape from a single input image. It produces the illusion of shape sharpening or rounding by modifying orientation patterns, strongly correlated to surface curvature. The warping algorithm produces convincing shape manipulation results for a variety of materials and lighting environments.

Beyond Developable: Computational Design and Fabrication With Auxetic Materials

Mark Pauly and Mina Konakovic, École polytechnique fédérale de Lausanne (EPFL); Keenan Crane, Carnegie Mellon University; Sofien Bouaziz, Bailin Deng – University of Hull; and Daniel Piker

A computational method for interactive 3D design and rationalization using auxetic material (material that can stretch uniformly up to a certain extent) that allows one to build doubly curved surfaces from flat sheet material.

AutoHair: Fully Automatic Hair Modeling From a Single Image

Menglei Chai, Tianjia Shao, Hongzhi Wu, Yanlin Weng, and Kun Zhou, Zhejiang University

Introducing the first fully automatic method for 3D hair modeling from a single image. The method’s efficacy and robustness are demonstrated on internet photos, resulting in a database of 50K 3D hair models and a corresponding hairstyle space that covers a wide range of real-world hairstyles.

SketchiMo: Sketch-Based Motion Editing for Articulated Characters

Byungkuk Choi, Roger Blanco i Ribera, Seokpyo Hong, Haegwang Eom, Sunjin Jung, and Junyong Noh, Korea Advanced Institute of Science and Technology; and J.P. Lewis and Yeongho Seol, Weta Digital Ltd.

A novel sketching interface for expressive editing of motion data through space-time optimization.