When you watch Peter Jackson’s docuseries The Beatles: Get Back for the first time (or the 20th, as some may be wont to do), you’ll surely be amazed by how great it sounds, though you might not know the behind-the-scenes efforts that went into creating that glorious sound.

A team of sound editors cleaned up audio from a number of different sources, synced it all up, and gave the sound mixers enough separate elements to balance things out. The sound editing team has already won awards from the Cinema Audio Society (CAS) and Motion Picture Sound Editors (MPSE) for their work, and Below the Line recently had a chance to talk to three of its members — Supervising Sound Editor Martin Kwok, Supervising Sound Editor and Re-recording Mixer Brent Burge, and Dialogue Editor Emile de la Rey — about what was involved with making the series sound as good as it does.

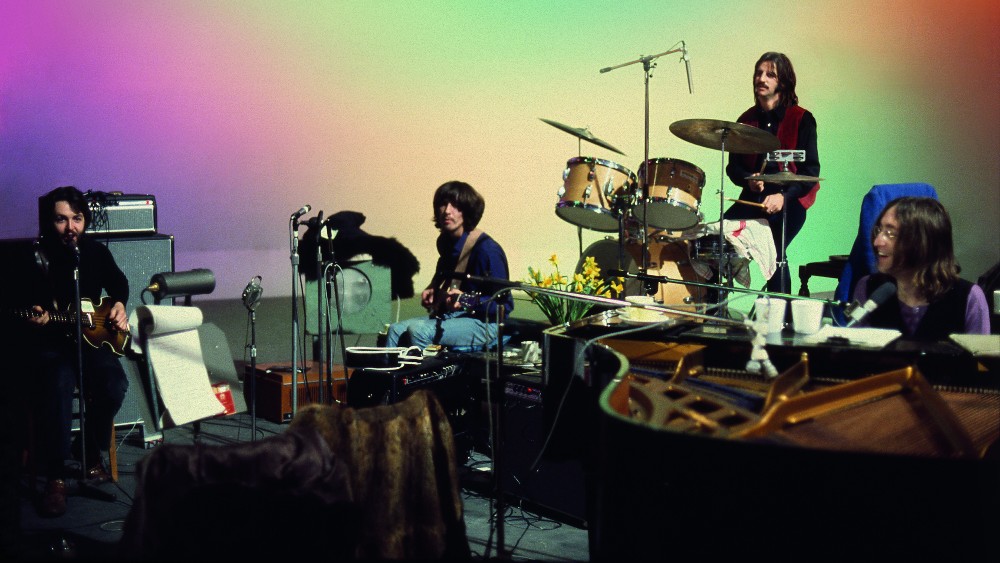

The trio previously worked with Jackson on his 2018 World War I doc They Shall Not Grow Old, which involved creating sound and dialogue for silent reels from the early 20th Century. For Get Back, they had to take varied sound sources, particularly ones from the filming of Michael Lindsay-Hogg’s 1970 doc Let It Be, clean them up, and then sync them so that the viewer could experience what it was like to be in the room with the Beatles as they rehearsed for a potential concert and wrote material for what would become their final album, also titled Let It Be. It would involve creating new proprietary machine-learning technology dubbed “MAL.”

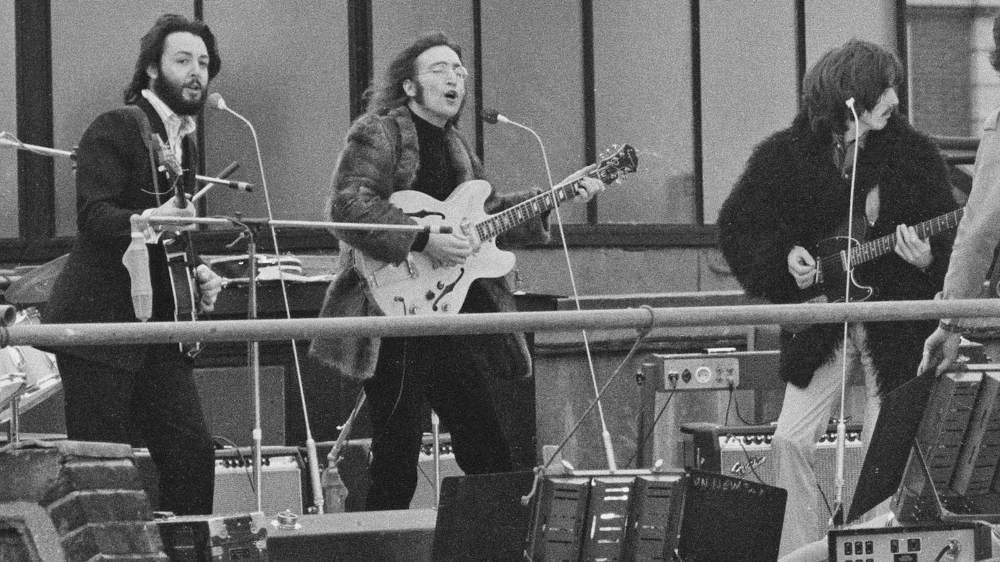

Get Back concludes with the Beatles’ famed rooftop concert atop the Apple Corps building, lovingly edited together from 10 different cameras, as George Martin and Glyn Johns were recording the performances in a studio set-up in the basement of the building. This interview is similarly epic in scope and length, but if you’re interested in the craft of sound, or simply a Beatles fan — and really, who isn’t? — I promise it’s worth the read.

Below the Line: I have to imagine that Get Back was a different challenge than They Shall Not Grow Old. In this case, you had audio to work from rather than having to create an all-new soundtrack from scratch. Are you involved with baking the tapes and transferring them to digital for you to clean up and edit, or is that process done before it gets to you?

Martin Kwok: As far as the original ingestion, the tapes were baked at the Apple end, I believe through Abbey Road, and it happened at various stages. I think initially, it was going back to the earlier 2000s, where they started to look at the idea of restoring Let It Be, but it then ramped up dramatically as they got closer to the 50th [anniversary]. Probably about [a] handful [of] years ago, there was a pass made where all the original Nagras were re-digitized and thrown into an AVID. An initial assembly was happening over in the UK, and it was the team from Apple, who had seen the work that Peter had done on that and thought, ‘This could be a way to take things.’ They spoke about the work and Peter got involved, and at that point, the media that was digitized was turned over to Wingnut [Jackson’s production company and post facility], and then the continuing process of finding audio that Apple didn’t even have from various pockets of the web, so to speak, and just deep-digging and original film set mag was used to find some of the little gaps that we had, until pretty much the most comprehensive timeline of that month in January 1969 was available to the picture team and us in sound, as well.

BTL: I’m not really familiar with what film sound was like in 1969, but I assume all the audio was separated from the film, or was some of that already synced together for you?

Kwok: Yes, and with that came some challenges: nothing was crystal-locked to the picture, so there were two 16mm cameras running independently of each other and independently of all the sound recordings. There was generally a sound recordist, Peter Sutton, who was trying to track, if you will, the film crews’ setups, and then there was also Glyn Johns and George Martin, who were charged with setting up a recording process, which over the course of that month, sort of evolved into initially a rehearsal setup they never really tracked properly with regard to eight-track recordings or anything at Twickenham [Studios]. But when they moved to Savile Row, the Apple Corps headquarters in central London, that’s when they set up a makeshift studio in a remarkably fast time to get the eight-track recordings that we now know make up Let It Be. Those were two completely separate recordings and as best as possible, Peter Sutton tried to get as much audio in the can as he possibly could. That entailed building up close to 150 hours of audio, whereas it was about 60 hours of film recorded, so there was a lot of stuff on Nagra tape for which there was no picture, for example.

Brent Burge: Just one thing to add to what Marty was saying is that you have to remember that this was all freeform in terms of how they were filming. I didn’t even know how Peter Sutton knew when they actually had the cameras running, for example. I think they’re all pretty much just trying to capture whatever they could on the Nagra. They had some discussions around it, but they were using tricks to fool the Beatles into thinking they weren’t filming when they were, for instance. I got involved early with Peter who just wanted to play me and Steve Gallagher, the music editor, an 87-minute cut of Day 4, for example, which was the first we saw. Those were early days where we got to see where Peter was traveling in terms of what he was trying to set up and also the quality of the audio, which was challenging at the time, because we were just literally listening to the mono Nagra tape recordings that Peter had cut together. Slowly, it evolved that Marty came on, and then, as Steve and I worked out a way of approaching the show…. I think in a similar way, we actually kept it quite freeform, because we were dealing with lots of effects tracks, lots of music tracks, lots of dialogue tracks. We were literally using a single piece of mono audio tape, and that’s what we had to then push out into as much as possible, to try and get all the sonic fidelity and anything we could do to make those tapes listenable in an Atmos setting. So that’s where Emile obviously came into play in this as well.

Emile de la Rey: I got involved, I think, in December 2020, so the project was well underway. At that stage, there was a six-and-a-half-hour cut of it that we had to work through. Over the years, I’ve developed some fairly decent approaches to noise reduction and that sort of thing using the tools we have at hand and certainly what we used on They Shall Not Grow Old. But it was a pretty big challenge to try and carve out a mixable track lay from that original mono material. We kind of dreamt about having this tool that could disentangle it all for us. When I started seriously looking into what was out there, there was a wide variety of quite current research happening in the area of source separation. Basically, myself and Andrew Muir, who worked with me on the machine learning side of things, were able to take the state-of-the-art architectures that people were exploring at the time and build on that to end up with something that works well for our use case, which is high-quality post audio. A lot of the research was sort of happening around quite low sample rates, nicely targeted towards telephony and real-time use. We kind of focused more on the other end of that scale on the quality and the end result and not caring so much about how long it might take to process something. It was quite a journey, and the machine learning ecosystem that we were developing and the models that we were training, kind of grew up with Get Back as the cut developed over 2021. Certainly, the rest of the sound editorial team were very much a part of that whole process because they would highlight these issues that they were having that they would love solved. And then, I was able, in a quite targeted way, to look into how we could do that. So we ended up with a whole machine learning toolkit at the end of Get Back that could do things like split John from Paul while they’re having a conversation, that sort of thing. Really, really useful tools in general, as well.

BTL: Actually, that is something I was very interested in knowing about. Normally, you might take the mono audio and chop it up into each person talking and then put each of them on their own separate audio track for processing/mixing. But that would take a very long time to do, and there are times when conversations are clearly happening off-mic, as well. I guess they eventually brought in an 8-track, and you had those recordings as well?

Kwok: That’s right, and even with the 8-track recording, you’re still talking about a lot of room bleed, so the separation wasn’t necessarily there in the 8-track either, not for some of the work that we were trying to do. Specifically, what Peter was looking for, as a filmmaker, was to ensure that his narrative was intelligible, audible, and being presented to the audience. The fact that the band would be playing, as any band does, jamming away at different volumes, and literally so many other things happening in their environment, those were the different complications and noise floors, which meant following a simple conversation that Peter wanted to highlight was effectively impossible with the original Nagra tape and our traditional methods of sound editorial work and restoration. The work that Andrew and the team put into being able to highlight the issues that we had, and then for the machine learning itself, which we lovingly refer to as MAL after Mal Evans, [the Beatles’ road manager], but it also stands for “Machine Audio Learning.” We’d be throwing stuff at MAL to learn about these issues, as I was saying, and that literally would be on a day-to-day level, but as we move through the various days in Get Back, there were new problems encountered. Those were the things that once MAL had figured out how to work with it, we were able to work out how to separate it. It’s not so much about chopping it, as such, not in the way that I see nonlinear picture or audio editing, but more just extracting: it’s just sucking out that element and leaving everything else there or the layers that you want. That, for me, was something that I could not have imagined, certainly 18 months earlier than when we really got into the guts of it at the base of 2021.

BTL: When you get to the end of the project, and MAL starts writing Beatles-level songs, then you have to stop, because it means the machines are taking over.

De la Rey: I haven’t revealed that model yet.

Kwok: Actually, what we were very much involved in was source separation and not regeneration. We’re certainly not regenerating any of their voices or instruments. The scope is massive within machine learning, but that’s not what we were charged with doing. It’s very much being respectful to the source and making sure that, again, the story could be told.

De la Rey: You quickly get used to these tools, and then you want more. Instead of just, for example, separating two voices, then it became, ‘Well, Paul wasn’t on mic, he was in the background. John was in the foreground on mic, but we kind of want them to be in the same space in the mix.’ Some further work was done to train some models to do that kind of shift to bring a voice to the foreground from the background, or to maybe match a piece of bootleg music with a piece of 8-track music. That sort of enhancement, I guess, but certainly not generation. I just wanted to add earlier that I think what made this project especially suitable for technology like machine learning is just the amount of material that we had to work through. My idea was basically doing the bulk of the dialogue editing while I was working on developing this tech. It’s a huge job, as I’m sure you can see.

Burge: But the other thing to add is that Emile was developing the tech, as we were doing the job. So it wasn’t a case of us being presented with the show and Peter saying, ‘Okay, here we go,’ and Emile walking in and saying, ‘Okay, here we go. We’ve got the technology framework, and now we’re just gonna….’ No, we were discovering all these discoveries of what we could do, which then would actually inform how Marty would have to change his workflow, to deal with the way things changed through the job. That was actually a really evolving process.

Kwok: It happened at a breakneck speed. I think it was probably about six weeks from January into February of 2021, where the R&D was in full swing, where Emile and Andrew were trying to build models that would work with the data sets that the rest of the team were trying to create. As things took shape, we realized there’s something in here, but as Emile had mentioned, in terms of its resolution, it was not where we wanted it to be for our mix days. Initially, we thought, ‘Well, okay, it’s not returning at the audio quality that we want, but it could be used as a sprinkling on top of the current Nagra material. At least there’ll be a little bit more definition.’ But as things got cleaner and clearer and amazingly, as Emile got us up to 48k, it became very evident that the work that we had already done, and had already started to present to bring onto the mix stage and Mike Hedges was not going to work, because the technology had already taken us forward to a point where I realized I’ve got to change my entire editorial approach. Machine learning is the baseline now, and that was one of those, “Okay, I’ve got to take two or three steps back, but once I move forward, the world opened dramatically.” The same can be said for the way that Steven Gallagher worked within the music editing, where, initially, it was about trying to find ways to get this voice out of this dirge of track, so we can hear the narrative. But then, we got greedy, and we started to figure out what those instruments were doing, the good, the bad, and the ugly of it, and letting MAL hear it all, and figure it out. That then influenced the way that the music editorial side could be helped, as well, particularly with regard to providing the mixers with a sense of the ability to balance and spatialize.

BTL: I do want to ask about the workflow between you and the film editing department. They obviously had 60 hours of film, as you said, and for the rooftop concert, they were working with footage from 10 cameras at once. With all that film, would you be cleaning up all of it or did you have to wait and know what they were going to use?

Burge: Because there was such a volume of material, we only could really work on what was in front of us, in terms of what Peter was handing over, and it has to be said that I don’t think I’ve ever worked with a crew of such, not specialists, in terms of what the abilities were, but it was just a perfect distillation of the right people in the right roles doing the work that eventually ended up with Peter having a track, which he was really happy with, I think. There was no fat in the crew whatsoever, and because of that, we were really just spiraling off exactly what Peter would hand over, and we just have to basically be thinking on our feet and moving quite quickly on those kinds of things. The time compressed, once things expanded in terms of the actual length of the project. There were certain things that didn’t change, certain schedules and so forth, that we still had to make. It was a case of us having to work quickly, and gladly, it was the length it was, and it wasn’t the full 60 hours.

Kwok: It’s worth saying that we certainly used the entire 150 hours of audio as a resource with regard to the way that MAL was learning, as well as abilities to go in and find clarity helpers, and different things that can help glue the track together, so we used the entire archive, but we certainly had more than enough on our hands in terms of the picture cut that Peter and Jabez [Olssen, the main editor on the series] were handing over. Also, I think the way that they were operating was being informed by the way that machine learning was developing as well.

Part 2 was the last of the parts to come to us, and that was the one that, at that point, we were able to return rough AVID guide tracks with machine learning to the edit room, which gave Peter and Jabez a lot more scope to cut, I guess, a bit more freely, you could say. Of course, because Peter had spent so long with this material and inside the transcripts, he really knew some of those stories that were buried in there, but he’d never thought he could use them, because they were just covered in a cloak of noise. They suddenly became part of the potential narrative, and so that changed the way he was able to edit.

We saw a lot more of the freedom that machine learning offered swing into Part 2, because as it was developing, they’re smart guys in the editing room. Once they’ve got an idea about what we can do, they’re like, ‘Well, I think we could use it this way.’ It was very much one of those evolutions where picture editorial were writing with us.

BTL: I want to talk about the rooftop concert part of the series. When I saw the concert in IMAX, there was a Q&A where Peter talked about syncing up the 10 cameras, but how did that work on the audio side of things? You obviously have people on the street being interviewed, and I’m not sure how that sounded with the band rocking out just a few stories above the street. You don’t see anyone actually recording the audio on the street, or at least they did a good job staying off-camera.

Burge: That’s interesting; we don’t really see that. There’s a guy up on the roof who had what looked like an 816 set up there, but I guess the other person was the interviewer who was walking around interviewing some of the people downstairs. It was a challenge that just blew our minds when we saw the cut that Peter presented with the split screens. It was just such an awesome real-time experience of the whole sequence. It was so, so good, and we looked for an opportunity to try and utilize the music from the roof and rerecord it in a space here in New Zealand, find a place where we could do it, but it really became logistically quite difficult to do that when you’re playing 47 minutes of the Beatles at full volume through a PA from a roof of the building. It could present some challenges.

But we did end up using a lot of the off-site Nagra recordings that were being recorded by the other cameras. They provided quite a lot of the spatiality of the distance that we had. We spent a lot of time setting up the actual onstage sound, because we were using basically 8-track splits that were done by Giles [Martin], which in itself would be a wonderful thing to see how they set all that up in terms of the cabling down to the control room. There’s a little bit of talkback you can hear in the sequence as well.

But then, it was a case of really getting a sense of that 8-track that was recorded, very close mic on the stage, to make it sound like you’re actually on the stage with The Beatles, which was a great tribute, I think, to Mike Hedges, who has experience with live mixing and so forth. He was really after a particular sound of that event when you’re on the stage with them as well as off the stage, kind of using the original Nagras off the stage. A great job was done there, I thought, in terms of getting a real live sound to the recordings that were made, which were great-sounding recordings.

Kwok: You did have Giles Martin and Sam Okell [the two credited music mixers] giving that pre-done pass of those stems the Beatles’ blessing, if you will, and then, for us to be able to use it in a surround Dolby Atmos environment and actually spatialize it. It was the perfect combination of knowing that we were representing the work that the Beatles would be happy with, and then actually putting it into a space where Peter Jackson, as a filmmaker, was happy to sort of say, ‘This is the experience I want you to have.’

One of the factors I was really proud of that we pulled off, in terms of the rooftop was there were sections, in terms of the setup, that were shot, but were recorded with no sound. That was a challenge that we were able to sort of again, go into the archives, and just dig out little bits of glue. When I look at it, even though I know what I’m hearing, I actually do buy 95 percent of it. For an average audience, they just wouldn’t pick up the stuff just thrown on to glue these scenes together in terms of the setup. That’s the power of being able to use the machine learning to its benefit like that, but also have, as Emile was saying, the breadth of material to actually use.

BTL: Before we wrap up, do any of you know why Glyn Johns was using four speakers in the basement control room set up in the Apple building?

Kwok: I don’t know. There was a pretty funny joke about it at the time from the guy at EMI who turned up, “I don’t know why you need four of them. You only have two ears.” [laughs] We’ve looked at those speakers and just sort of gone, ‘What is going on there?’ There is some of that which has remained mystical and magical to us, and I think Matt Hurwitz may well be someone who’s actually tried to dig in on that. But I don’t know. We were never given any real explanation.

The Beatles: Get Back is currently streaming on Disney+, and it will be released on DVD and Blu-ray on July 12. You can also read Below the Line’s interview with Editor Jabez Olssen to learn more about the creation of this brilliant docuseries.

(Thanks to Steve Rosenthal from MARS aka Magic Shop Archive and Restoration Studio for providing some informative questions.)